Normal, healthy people who aren’t constantly distracted by every tiny GPT development (so, not me) might not yet be aware that the top trending repos on GitHub are full of GPT automation harnesses.

The popular phrase on Reddit is something like, “Chatbots are done for, the future belongs to agents.”

The most popular one right now is Auto-GPT, which connects to your OpenAI account and recursively builds a full project for you. The hype is real: there are already hundreds of YouTube videos on it, so many that you would think they were selling NFTs.

Anyway, I gave it a go but couldn’t get it running; I have a Mac M1, there were too many bugs, and I didn’t want to sign up for a Pinecone account (as cool as the idea was).

GitHub – murchie85/GPT_AUTOMATE: An automation harness for GPT to produce a full ready to go code poject.

So I decided to build my own, and here I want to show you how I did it. That way, if you don’t like theirs or mine, you can DIY.

Step 1: The Concept of Agents

Because Large Language Models (LLMs) like GPT-3 have limited token counts for input and output, they can’t spit out an entire web server project (with all the HTML, DB, and route files) in one go.

You have to kind of cajole it and remind it of what was produced earlier; sometimes you run out of conversation allowance and are never able to give it the full context.
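To make the token squeeze concrete, here is a minimal sketch of trimming a conversation so it fits a budget. The four-characters-per-token figure is only a rough rule of thumb for English text (real tokenizers differ), and `estimate_tokens` and `fit_to_budget` are hypothetical names made up for this illustration.

```python
def estimate_tokens(text: str) -> int:
    """Very rough guess: about four characters per token for English text."""
    return max(1, len(text) // 4)


def fit_to_budget(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose estimated total fits the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):      # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break                           # everything older gets dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))             # restore chronological order


history = ["a" * 400, "b" * 40, "c" * 40]   # one long old message, two short new ones
trimmed = fit_to_budget(history, budget=25)
```

The oldest message is the first to go, which is exactly why the model "forgets" the early parts of a long project.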

So the idea of agents came around (by whom? I have no idea). An agent is a specialized wrapper around the GPT API that prompts it in a certain way and processes the results in a certain way, so you can have things like:

- Prompt Agent

- Code generator Agent

- Debugger Agent

Each one manages a certain level of abstraction, which frees up token allowance and lets you break your project down into bits.
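As a rough illustration of "a wrapper that prompts in a certain way and processes the result in a certain way", here is a minimal sketch. The `Agent` class, the `LLM` type, and `echo_llm` are all hypothetical names for this example, not code from GPT_AUTOMATE; `echo_llm` stands in for a real GPT API call so the sketch runs offline.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical type: any function that takes a prompt and returns the reply.
# In a real harness this would wrap a call to the GPT API.
LLM = Callable[[str], str]


@dataclass
class Agent:
    """A thin wrapper that always prompts the model one specific way."""
    name: str
    instructions: str                              # fixed framing for this agent
    llm: LLM
    postprocess: Callable[[str], str] = str.strip  # how the reply gets cleaned up

    def run(self, task: str) -> str:
        prompt = f"{self.instructions}\n\nTask:\n{task}"
        return self.postprocess(self.llm(prompt))


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model: reports how much prompt it received."""
    return f"  [model reply to {len(prompt)} chars of prompt]  "


code_agent = Agent("code generator", "Return only source code.", echo_llm)
debug_agent = Agent("debugger", "Find the bug and return the fixed file.", echo_llm)
```

Each agent only carries its own instructions plus the task it is handed, so none of them needs the whole conversation in its prompt.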

Step 2: Design

OK, so having different agents for different things makes sense, but how do you stitch them together? Don’t they all need to know what the other ones are doing?

Kind of. The trick is to take the output of one agent, compress it, and pass only the relevant bits to the next agent. That’s tricky because you have to figure out a way of clearly defining what matters and what doesn’t.

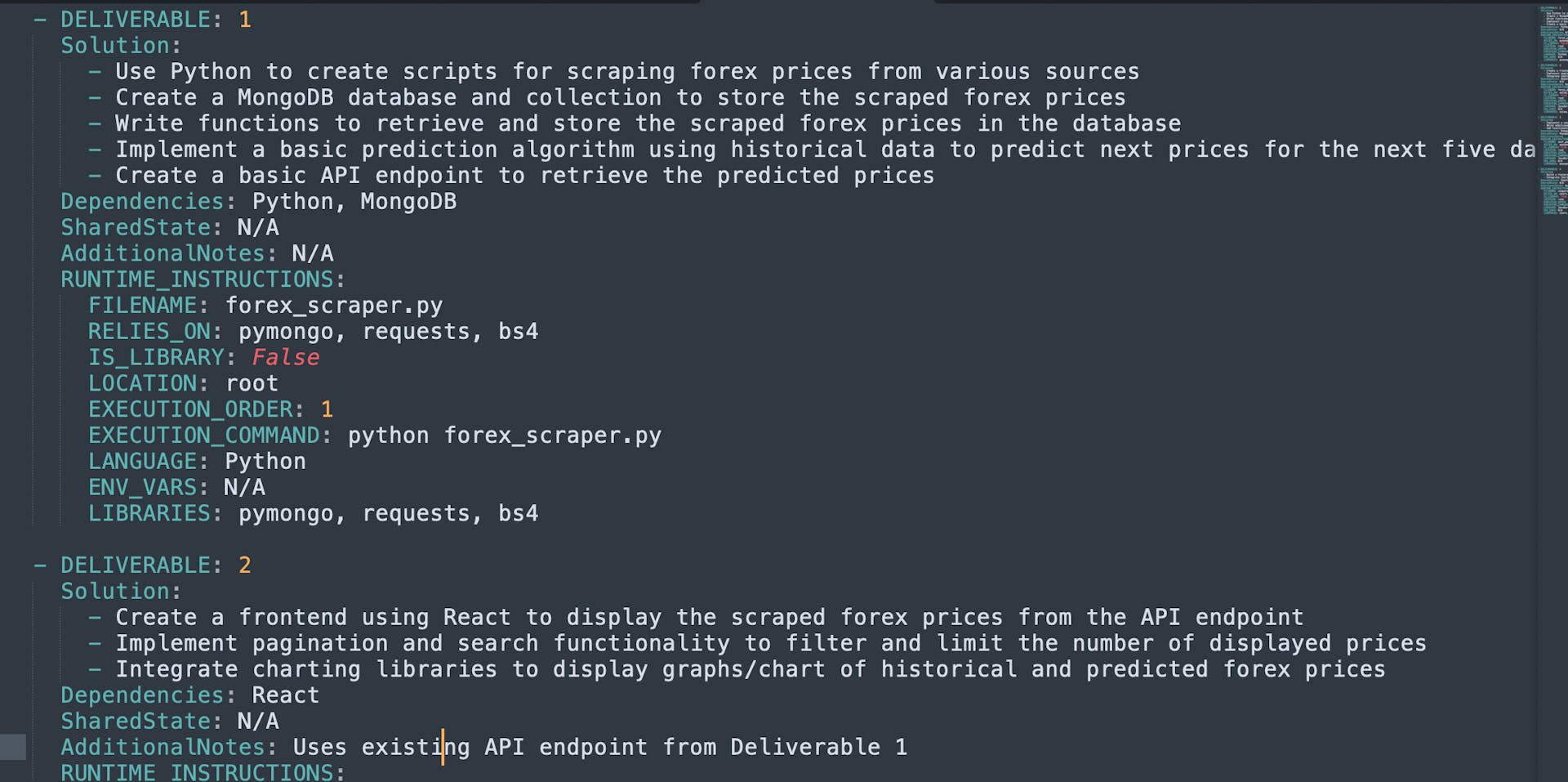

Having the GPT API dice up tasks into YAML means you can pipe the YAML into your next API call.
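Here is a minimal sketch of that piping step. The YAML layout in `PLAN` is an assumed shape invented for this example (the real one is whatever you ask the model to produce), and the tiny parser only handles that flat shape; a real harness would more likely lean on a proper YAML library. `parse_deliverables` and `prompt_for` are hypothetical helper names.

```python
# Assumed plan format, for illustration only.
PLAN = """\
DELIVERABLES:
  - name: manage.py
    description: Entry point for the web server
  - name: index.html
    description: Landing page that lists blog posts
"""


def parse_deliverables(text: str) -> list[dict[str, str]]:
    """Parse a tiny YAML subset: a list of flat key/value items."""
    items: list[dict[str, str]] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or line.endswith(":"):
            continue                      # blank line, comment, or section header
        if line.startswith("- "):
            items.append({})              # a dash starts a new deliverable
            line = line[2:]
        if not items:
            continue                      # key/value outside any list item
        key, _, value = line.partition(":")
        items[-1][key.strip()] = value.strip()
    return items


def prompt_for(item: dict[str, str], project: str) -> str:
    """Build the next prompt from one deliverable only, not the whole plan."""
    return (
        f"Project: {project}\n"
        f"File: {item['name']}\n"
        f"Purpose: {item.get('description', 'not specified')}\n"
        "Return only the contents of this file."
    )


deliverables = parse_deliverables(PLAN)
first_prompt = prompt_for(deliverables[0], "blog web server")
```

The point is that the next call sees three short lines about one file rather than the entire earlier conversation.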

So, in my case, what I did was have a daddy agent that classified the problem:

    # Pseudocode: the parent agent picks a path based on its classification
    if solvableWithCode:
        callAgents()
    else:
        print("Sorry mate, here is a business plan instead")

If the problem could be solved by code, I would ask it to generate YAML like above; this way, each DELIVERABLE would be a separate call to the GPT API in a structured way, allowing it to produce only the code for that one thing.
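A runnable sketch of that classify-then-dispatch idea might look like the following. Every name here (`LLM`, `is_solvable_with_code`, `handle`, `fake_llm`) and the prompt wording are assumptions for illustration, and the list of deliverables is passed in directly rather than parsed from the model's YAML to keep the example short.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, reply text out


def is_solvable_with_code(request: str, llm: LLM) -> bool:
    """Ask the model for a one-word verdict and read it defensively."""
    reply = llm(
        "Can the following request be solved by writing software? "
        f"Answer YES or NO only.\n\n{request}"
    )
    return reply.strip().upper().startswith("YES")


def handle(request: str, deliverables: list[str], llm: LLM) -> dict[str, str]:
    """Return {deliverable: generated text}, or a fallback when code won't help."""
    if not is_solvable_with_code(request, llm):
        return {"business_plan": llm(f"Write a short business plan for: {request}")}
    # One separate call per deliverable keeps each prompt and reply small.
    return {
        name: llm(f"Project: {request}\nWrite only the code for: {name}")
        for name in deliverables
    }


def fake_llm(prompt: str) -> str:
    """Offline stand-in so the sketch runs without an API key."""
    if prompt.startswith("Can the following"):
        return "YES" if "platform" in prompt else "NO"
    return f"<reply to: {prompt.splitlines()[-1]}>"


result = handle("Gimme a trading platform now!", ["manage.py", "index.html"], fake_llm)
fallback = handle("Make me happy", [], fake_llm)
```

Swapping `fake_llm` for a function that really calls the GPT API is the only change needed to try it against a live model.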

Step 3: Automating It All

So, we have 3 steps:

- The user states their request: “Gimme a trading platform now!”

- Classifier Agent breaks it up into smaller chunks via YAML.

- Decomposer Agent iterates through each YAML deliverable and builds the code.

OK, so what happens if the response is too big and the code is cut off? The prompt tells the model to return only 150 lines at a time, and I iterate in a loop, continuously appending the output to the target file.

So, say there was a web server project needing manage.py, blogList.js, and index.html; it would build each one out, one at a time, and write it to a file.
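The chunk-and-append loop can be sketched roughly like this. The `ChunkFn` signature, `build_file`, and `fake_chunks` are hypothetical, and the stopping rule (a chunk shorter than requested means the file is finished) is my assumption for the example rather than something the project is known to do.

```python
from pathlib import Path
from typing import Callable

# Hypothetical signature: (file name, first line wanted, max lines) -> code text.
# A real version would turn those three values into a GPT prompt.
ChunkFn = Callable[[str, int, int], str]


def build_file(target: Path, ask_chunk: ChunkFn, chunk_lines: int = 150,
               max_rounds: int = 20) -> int:
    """Append chunks to `target` until a short or empty chunk signals the end."""
    target.write_text("")                   # start from an empty file
    written = 0
    for _ in range(max_rounds):             # hard cap so a bad reply can't loop forever
        chunk = ask_chunk(target.name, written + 1, chunk_lines)
        lines = chunk.splitlines()
        if not lines:
            break
        with target.open("a") as fh:
            fh.write("\n".join(lines) + "\n")
        written += len(lines)
        if len(lines) < chunk_lines:        # fewer than asked for: assume we're done
            break
    return written


def fake_chunks(name: str, start: int, limit: int) -> str:
    """Offline stand-in that pretends the finished file is 320 lines long."""
    total = 320
    end = min(start + limit - 1, total)
    return "\n".join(f"# {name} line {n}" for n in range(start, end + 1))
```

Calling `build_file(Path("manage.py"), fake_chunks)` would make three requests (150, 150, then 20 lines) and leave a 320-line file behind; the `max_rounds` cap is there because a live model won't always stop when you expect it to.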

The Future and My Feelings About It All

This stuff isn’t rocket science; in fact, it’s just plain old turn-the-handle automation. I did a blog called “making bread out of noodles” where I said that people think we are closing in on AGI, but we aren’t.

We just have a very smart language model that we are trying to stitch together into AGI as if we are trying to make bread out of noodles.

The end product might resemble something like a loaf of bread, but it’s not – that’s how I feel about the automation of LLMs.

Nevertheless, it’s still amazing to be part of this Dev movement; there is huge value in GPT, ChatGPT, and LLMs, and the creativity of people building automation harnesses around them to make people’s lives easier is a great thing to be a part of.

So if that’s your thing, have a bash – reach out to me if you want pointers or have things you want me to improve on.

Note: The project isn’t complete; there is debugging, logging, and other bits to add, but feel free to play around with it, fork it, and/or copy it and make your own! I’d love to hear what you come up with!

This article was originally published by McMurchie on Hackernoon.